Fairness Measures in AI Product Development: Why They Matter More Than Ever

Artificial intelligence is changing the way products are designed, used, and trusted. From hiring tools to medical diagnosis systems, AI is influencing life-changing decisions every day. But here’s the problem: AI isn’t automatically fair. If the data behind it is biased, the outputs will reflect that bias. That raises the question: what purpose do fairness measures serve in AI product development? This is where they step in: they help us catch, measure, and fix problems before they harm people.

In this article, I’ll walk you through what fairness measures mean, why they’re important, the different ways we can measure fairness, and how businesses can build more responsible AI systems. Along the way, we’ll look at real examples, current regulations, and some practical steps developers and organizations can take right now.

What Do We Mean by Fairness in AI?

At its core, AI fairness is about making sure systems treat people equitably. Imagine applying for a job and being rejected by an AI hiring tool not because you lacked the skills, but because the system learned historical hiring patterns that favored men over women. That’s not fair, and it’s not acceptable.

Fairness measures are essentially the tools, metrics, and methods developers use to detect and reduce AI bias. They’re not just a technical fix; they’re part of the broader fields of ethical AI and responsible AI, which aim to make technology align with human values.

It’s also worth noting that fairness is not a one-size-fits-all concept. Different industries, cultures, and laws define fairness differently. For example, what counts as “fair” in U.S. employment law might not be the same in Europe or Asia. That’s why building fairness into AI is so complex but also so important.

Why Fairness Measures Matter in AI Product Development

Let’s face it: an AI product that people don’t trust won’t succeed. Whether you’re building a healthcare app, a financial service, or an educational tool, fairness is directly tied to adoption.

Here are a few reasons fairness measures are essential in AI product development:

- User trust and adoption – People are quick to reject systems they believe are unfair. Fairness is directly linked to user confidence.

- Legal compliance – Laws like the EU AI Act and GDPR are making AI accountability a legal requirement, and frameworks such as the U.S. Blueprint for an AI Bill of Rights, though non-binding, signal where policy is heading.

- Business reputation – Companies that ignore fairness risk lawsuits, fines, and reputational damage. Remember the Amazon hiring AI scandal? That tool was scrapped after showing gender bias in candidate selection.

- Balanced performance – A model that works well for one group but poorly for another is a flawed product. Fairness measures ensure performance across diverse users.

This connects to a bigger question I’ve explored in another post: [Has AI Gone Too Far?]. The fear often comes from systems that make decisions without fairness checks. When AI is used responsibly, it becomes a tool for inclusion rather than exclusion.

Types of Bias in AI Systems

Before we can talk about fairness measures, we need to understand the kinds of bias that creep into AI. Some of the most common include:

- Data bias in AI – If your dataset overrepresents one group and underrepresents another, the AI will likely perform better for the majority group.

- Algorithmic bias – Even if data is balanced, the model itself can amplify subtle patterns unfairly.

- Bias in machine learning feedback loops – User interactions can reinforce bias (think of a recommender system that keeps pushing one type of content).

- Deployment bias – An AI system might be used in contexts it wasn’t designed for, leading to unfair results.

These biases don’t always come from malice; they often come from oversight. That’s why algorithmic bias detection and monitoring are critical steps in any AI lifecycle.
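As a rough illustration of catching data bias early, here is a minimal sketch that compares each group’s share of a dataset with a reference share. The function name `representation_gaps`, the group labels, and the 50/50 reference split are all hypothetical; in practice the reference would come from whatever population the product is meant to serve.

```python
from collections import Counter


def representation_gaps(groups, reference_shares):
    """Return observed share minus reference share for each group.

    A positive gap means the group is overrepresented in the data,
    a negative gap means it is underrepresented.
    """
    if not groups:
        raise ValueError("groups must not be empty")
    counts = Counter(groups)
    total = len(groups)
    return {
        group: counts.get(group, 0) / total - share
        for group, share in reference_shares.items()
    }


# Hypothetical dataset: 80 records from group "A", 20 from group "B".
sample_groups = ["A"] * 80 + ["B"] * 20
# Hypothetical reference population: an even split between the two groups.
gaps = representation_gaps(sample_groups, {"A": 0.5, "B": 0.5})

for group, gap in gaps.items():
    print(f"{group}: {gap:+.2f}")
```

A check like this only flags representation gaps. It says nothing about algorithmic, feedback-loop, or deployment bias, which need their own tests.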

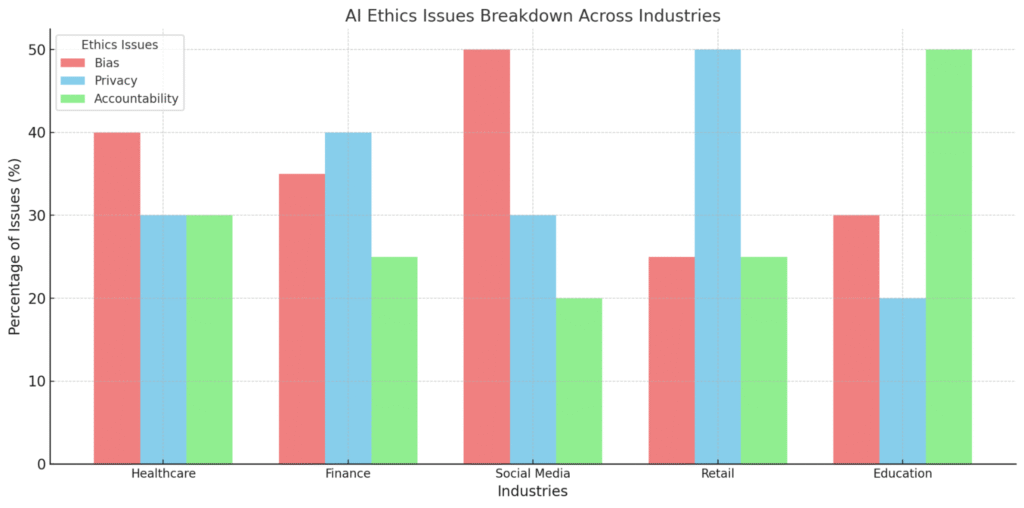

AI bias in action

In 2025, the problem of AI bias is no longer just a theoretical concern; it’s a measurable issue with real-world consequences, costing businesses and harming users.

A prime example is the healthcare sector, where a widely used algorithm was found to systematically under-identify Black patients for high-risk care management programs. This occurred because the model used historical healthcare spending as a proxy for a patient’s need. Since less money has historically been spent on Black patients with similar conditions, they were flagged as lower risk, leading to delayed or denied treatment. This is a clear case of how data bias in AI can perpetuate existing societal inequalities. Furthermore, fairness drift, where an initially fair AI model becomes biased over time, has emerged as a significant challenge, requiring continuous monitoring and timely intervention to prevent harm.

How Do We Measure Fairness?

This is where things get a little technical, but stick with me; it’s the heart of the topic. Researchers and developers use AI fairness metrics to quantify how fair a system is. Some of the most widely discussed measures include:

- Statistical parity (demographic parity) – The rate of favorable outcomes should be the same across groups.

- Equal opportunity – Among people who truly qualify for the favorable outcome, each group should have the same chance of receiving it (equal true positive rates).

- Equalized odds – Both true positive rates and false positive rates should be equal across groups, so errors are balanced.

- Predictive parity – A positive prediction should be correct equally often regardless of group (equal precision).

- Counterfactual fairness – If we changed a sensitive attribute (like gender), the decision should remain the same.
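To make the group-based metrics above concrete, here is a minimal sketch in plain Python that computes the per-group quantities behind statistical parity, equal opportunity, equalized odds, and predictive parity. The function name `group_metrics` and the toy labels are hypothetical, and it assumes binary 0/1 labels and predictions. Counterfactual fairness is left out because it needs a causal model rather than simple counts.

```python
from collections import defaultdict


def group_metrics(y_true, y_pred, groups):
    """Per-group selection rate, TPR, FPR, and PPV for binary 0/1 labels."""
    counts = defaultdict(lambda: {"tp": 0, "fp": 0, "tn": 0, "fn": 0})
    for truth, pred, group in zip(y_true, y_pred, groups):
        if pred == 1:
            key = "tp" if truth == 1 else "fp"
        else:
            key = "fn" if truth == 1 else "tn"
        counts[group][key] += 1

    def ratio(numerator, denominator):
        # NaN signals "undefined for this group" instead of raising.
        return numerator / denominator if denominator else float("nan")

    metrics = {}
    for group, c in counts.items():
        total = sum(c.values())
        metrics[group] = {
            # Compared across groups for statistical parity.
            "selection_rate": ratio(c["tp"] + c["fp"], total),
            # Compared across groups for equal opportunity.
            "tpr": ratio(c["tp"], c["tp"] + c["fn"]),
            # Together with TPR, compared for equalized odds.
            "fpr": ratio(c["fp"], c["fp"] + c["tn"]),
            # Compared across groups for predictive parity.
            "ppv": ratio(c["tp"], c["tp"] + c["fp"]),
        }
    return metrics


# Hypothetical toy data: four people in group "A", four in group "B".
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["A"] * 4 + ["B"] * 4

metrics = group_metrics(y_true, y_pred, groups)
parity_gap = abs(metrics["A"]["selection_rate"] - metrics["B"]["selection_rate"])
print(metrics["A"])
print(metrics["B"])
print(f"statistical parity gap: {parity_gap:.2f}")
```

In this toy data, group A is selected 75% of the time and group B 25% of the time, so the statistical parity gap is 0.50. Real audits would use far more data and report uncertainty around each rate.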

No single metric works for every situation. In fact, some fairness metrics directly conflict with each other. Choosing the right one depends on context, which is why fairness is as much a policy question as a technical one.
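One way to see the conflict is with a little arithmetic. The sketch below assumes a classifier that satisfies equalized odds (the same true positive rate and false positive rate in both groups) and shows that predictive parity still breaks when the groups have different base rates. The function name `ppv` and all the numbers are hypothetical, chosen only to keep the arithmetic easy to follow.

```python
def ppv(base_rate, tpr, fpr):
    """Positive predictive value (precision) via Bayes' rule.

    base_rate: share of the group that truly belongs to the positive class.
    tpr, fpr:  the classifier's true and false positive rates for the group.
    """
    true_positives = tpr * base_rate
    false_positives = fpr * (1 - base_rate)
    return true_positives / (true_positives + false_positives)


# Same error rates in both groups, so equalized odds holds by construction.
TPR, FPR = 0.8, 0.2

# Hypothetical base rates: 50% positives in group A, 20% in group B.
ppv_a = ppv(0.5, TPR, FPR)
ppv_b = ppv(0.2, TPR, FPR)

print(f"PPV for group A: {ppv_a:.2f}")
print(f"PPV for group B: {ppv_b:.2f}")
```

With these numbers a positive prediction is right about 80% of the time for group A but only about 50% of the time for group B, even though the error rates are identical. Unless base rates match or the classifier is perfect, you generally have to choose which of the two properties to prioritize.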

Methods for Implementing Fairness in AI

Now that we know how to measure fairness, let’s talk about how to actually mitigate AI bias. Broadly, there are three stages where fairness can be introduced:

- Data level
  - Re-sampling underrepresented groups
  - Re-weighting data
  - Using synthetic data to fill gaps

- Model level
  - Adding fairness constraints during training
  - Designing algorithms that prioritize balanced outcomes

- Post-processing level
  - Adjusting results after predictions
  - Using thresholding techniques to balance outputs
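Two of these techniques are simple enough to sketch in a few lines. The example below is an illustration under stated assumptions, not a production recipe: `reweighing_weights` uses one common re-weighting scheme (weight = P(group) × P(label) / P(group, label)), and `apply_group_thresholds` shows post-processing with per-group cutoffs. The function names, toy data, and threshold values are all hypothetical.

```python
from collections import Counter


def reweighing_weights(groups, labels):
    """Data level: one weight per record so that, after weighting,
    group membership and label look statistically independent."""
    n = len(labels)
    group_counts = Counter(groups)
    label_counts = Counter(labels)
    joint_counts = Counter(zip(groups, labels))
    return [
        group_counts[g] * label_counts[y] / (n * joint_counts[(g, y)])
        for g, y in zip(groups, labels)
    ]


def weighted_positive_rate(groups, labels, weights, group):
    """Weighted share of positive labels within one group."""
    rows = [(y, w) for g, y, w in zip(groups, labels, weights) if g == group]
    return sum(w for y, w in rows if y == 1) / sum(w for _, w in rows)


def apply_group_thresholds(scores, groups, thresholds):
    """Post-processing level: turn scores into 0/1 decisions using
    a separate cutoff for each group."""
    return [int(s >= thresholds[g]) for s, g in zip(scores, groups)]


# Hypothetical training labels: group A has 4 of 6 positives, group B 1 of 4.
train_groups = ["A"] * 6 + ["B"] * 4
train_labels = [1, 1, 1, 1, 0, 0, 1, 0, 0, 0]
weights = reweighing_weights(train_groups, train_labels)
rate_a = weighted_positive_rate(train_groups, train_labels, weights, "A")
rate_b = weighted_positive_rate(train_groups, train_labels, weights, "B")
print(f"weighted positive rate: A={rate_a:.2f}, B={rate_b:.2f}")

# Hypothetical model scores for four people in each group.
scores = [0.9, 0.7, 0.6, 0.4, 0.8, 0.5, 0.45, 0.3]
score_groups = ["A"] * 4 + ["B"] * 4
single = apply_group_thresholds(scores, score_groups, {"A": 0.6, "B": 0.6})
adjusted = apply_group_thresholds(scores, score_groups, {"A": 0.65, "B": 0.5})
print("single threshold:  ", single)
print("group thresholds:  ", adjusted)
```

After re-weighting, both groups have the same weighted positive rate, so a model trained with these sample weights sees less of the original imbalance. In the thresholding example, a single cutoff selects three people from group A and one from group B, while the adjusted cutoffs select two from each. Whether group-specific thresholds are appropriate for a given product is a policy and legal question, not just a technical one.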

In addition, developers can rely on open-source tools like IBM’s AI Fairness 360, Microsoft Fairlearn, and Google’s What-If Tool. These provide frameworks for bias testing and algorithmic bias detection in practice.

And let’s not forget the human side: inclusive AI design means bringing diverse voices into the development process, from data collection to testing.

Trade-Offs and Challenges

Here’s the reality: you can’t maximize fairness and accuracy at the same time in every case. Sometimes improving fairness reduces predictive accuracy slightly. But does that mean it’s not worth it? Absolutely not. A system that’s 98% accurate for one group and 70% for another isn’t “accurate” at all; it’s discriminatory.
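A single headline accuracy number can hide exactly this kind of gap. Here is a minimal sketch, using made-up data that reproduces the 98% versus 70% split, that reports accuracy per group alongside the overall figure. The function name `accuracy_by_group` and the group sizes (900 and 100 records) are hypothetical.

```python
def accuracy_by_group(y_true, y_pred, groups):
    """Return the share of correct predictions for each group."""
    correct, total = {}, {}
    for truth, pred, group in zip(y_true, y_pred, groups):
        total[group] = total.get(group, 0) + 1
        correct[group] = correct.get(group, 0) + int(truth == pred)
    return {group: correct[group] / total[group] for group in total}


# Hypothetical data: 900 records in group A (882 correct),
# 100 records in group B (70 correct). All true labels are 1 for simplicity.
groups = ["A"] * 900 + ["B"] * 100
y_true = [1] * 1000
y_pred = [1] * 882 + [0] * 18 + [1] * 70 + [0] * 30

per_group = accuracy_by_group(y_true, y_pred, groups)
overall = sum(int(t == p) for t, p in zip(y_true, y_pred)) / len(y_true)

print(f"overall accuracy: {overall:.3f}")
for group, accuracy in per_group.items():
    print(f"group {group}: {accuracy:.2f}")
```

Because group A makes up most of the data, the overall accuracy comes out above 95% even though group B sits at 70%. That is why per-group reporting belongs next to any aggregate metric.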

Other challenges include:

- Global variation – Fairness in AI isn’t universal. What counts as fair in one country may not in another.

- Explainability – Even when fairness measures work, they’re often hard to explain. This is where explainable AI (XAI) becomes crucial.

- Dynamic bias – Bias can creep back in over time, meaning fairness monitoring has to be ongoing.
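Ongoing monitoring for dynamic bias can start small. The sketch below keeps a sliding window of recent decisions per group and raises a flag when the gap in selection rates exceeds a tolerance. The class name `ParityMonitor`, the window size, and the `max_gap` tolerance are hypothetical choices for illustration; a real system would also need enough samples per window for the rates to be meaningful.

```python
from collections import deque


class ParityMonitor:
    """Track the selection-rate gap between groups over recent decisions."""

    def __init__(self, window=100, max_gap=0.1):
        self.window = window      # how many recent decisions to keep per group
        self.max_gap = max_gap    # tolerated gap between highest and lowest rate
        self.recent = {}          # group -> deque of recent 0/1 decisions

    def record(self, group, decision):
        """Store one 0/1 decision; the oldest one drops out when the window is full."""
        self.recent.setdefault(group, deque(maxlen=self.window)).append(decision)

    def gap(self):
        """Difference between the highest and lowest group selection rate."""
        rates = [sum(d) / len(d) for d in self.recent.values() if d]
        return max(rates) - min(rates) if len(rates) >= 2 else 0.0

    def alert(self):
        return self.gap() > self.max_gap


monitor = ParityMonitor(window=4, max_gap=0.2)

# Early traffic: both groups are selected half of the time.
for decision in [1, 0, 1, 0]:
    monitor.record("A", decision)
    monitor.record("B", decision)
gap_before = monitor.gap()
alert_before = monitor.alert()

# Later traffic: group B stops being selected, so its window fills with zeros.
for _ in range(4):
    monitor.record("B", 0)
gap_after = monitor.gap()
alert_after = monitor.alert()

print(f"before drift: gap={gap_before:.2f}, alert={alert_before}")
print(f"after drift:  gap={gap_after:.2f}, alert={alert_after}")
```

The same pattern works for other metrics, such as true positive rates, once ground-truth outcomes become available.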

This ties closely to something I’ve discussed in [How to Avoid AI Taking Your Job?]: fairness also applies to how AI impacts workers and industries. If we don’t implement fairness checks, job automation risks becoming deeply unequal.

Real-World Applications of Fairness Measures

Fairness isn’t abstract; it shows up in everyday AI products. Here are a few places where fairness measures play a critical role:

- Hiring & HR tools – Screening resumes fairly across gender, race, or background.

- Healthcare AI – Ensuring diagnosis models don’t miss conditions in minority groups.

- Finance – Preventing discrimination in credit scoring and loan approvals.

- Law enforcement – Addressing controversies in predictive policing systems.

These examples highlight why fairness isn’t just “nice to have.” It’s an essential safeguard for building responsible AI that impacts millions of lives.

The Regulatory and Ethical Landscape

As of 2025, fairness in AI isn’t just ethical; it’s becoming a legal requirement. A few major developments include:

- EU AI Act – Requires high-risk AI systems to undergo fairness checks and documentation.

- U.S. Blueprint for an AI Bill of Rights – A non-binding framework that outlines principles for safe, transparent, and fair AI use.

- ISO/IEC Standards – International guidelines for fairness, transparency, and AI governance.

Businesses can no longer ignore fairness without facing real consequences. Building AI accountability into development cycles is as important as testing for security or performance.

Best Practices for Developers and Organizations

So how can developers and companies build fairness into their AI systems? Here are some practical steps:

- Collect diverse, representative datasets.

- Regularly test with multiple fairness metrics, not just one.

- Incorporate inclusive AI design from the ground up.

- Use explainable AI (XAI) techniques to make fairness decisions transparent.

- Monitor continuously: fairness is not a one-time project.

And if you’re working with AI in your own career or business, I recommend checking out my post [How to Cautiously Use AI for Work]. It’s all about balancing the benefits of AI while staying aware of the risks, fairness being one of the biggest.

The Future of Fairness in AI

Looking ahead, fairness measures will only grow in importance. We’re moving toward:

- Automated fairness auditing tools.

- Stronger global standards for AI ethics.

- Fairness as a competitive advantage (users will choose AI products they trust).

- More emphasis on transparency and AI governance.

In other words: fairness isn’t just about doing the right thing. It’s about making AI products sustainable, adoptable, and future-proof.

Conclusion

To wrap it up: fairness measures in AI product development are not optional; they’re essential. Whether the bias comes from the data or from the model itself, unchecked problems can harm users, damage businesses, and erode trust. By embracing AI fairness metrics, algorithmic fairness, and responsible AI practices, developers can create systems that are both powerful and equitable.

So, in the end, what purpose do fairness measures serve in AI product development? They catch, measure, and fix bias before it harms people. AI that isn’t fair isn’t really intelligent. It’s short-sighted. True innovation lies in building systems that serve everyone equitably. That’s the promise of ethical AI, and it’s what will separate the winners from the rest in the years to come.

Can fairness in AI ever be 100% guaranteed?

No. Fairness can’t be absolute, because definitions of fairness vary across cultures, industries, and laws. The goal is to minimize bias as much as possible and keep monitoring it over time.

How do developers decide which fairness metric to use?

It depends on the use case. For example, in healthcare, equal opportunity may matter most, while in credit scoring, predictive parity is often prioritized. Context drives the choice.

Do fairness measures reduce AI accuracy?

Sometimes there’s a trade-off, but a system that’s only accurate for one group isn’t truly accurate. Fairness measures balance accuracy across all users, making the product more reliable overall.

Who is responsible for ensuring fairness in AI?

It’s a shared responsibility: developers, data scientists, business leaders, and regulators all play a role. Fairness isn’t just a technical issue; it’s also organizational and ethical.

What’s the easiest way for companies to start with fairness measures?

Begin with bias audits on existing AI models, use open-source tools like AI Fairness 360, and include diverse voices in product design. Even small steps can reveal hidden issues.
