Has AI Gone Too Far? Why This Question Is Everywhere Now

Are you wondering whether AI has gone too far? Everywhere you look, artificial intelligence is showing off: writing code, painting portraits, answering questions faster than most humans can think. It’s impressive, even exciting. But beneath the hype, there’s a growing sense of discomfort. From deepfakes spreading misinformation to AI tools replacing creative jobs, it’s hard not to wonder: has AI gone too far? This question isn’t just about fear or resistance to change; it’s about figuring out where the line is, or if one even exists. As AI moves from helpful assistant to something more autonomous and unpredictable, we need to ask ourselves what kind of future we’re really building. Are we still in control, or are we racing forward just because we can? Let’s unpack the good, the bad, and the genuinely weird sides of AI and see if we’ve already crossed a line we can’t uncross.

If you are new to the AI world and need a quick overview, read my exclusive post on What is AI.

The Speed of AI Advancement: Too Fast for Comfort?

Let’s be honest: it feels like AI went from “kind of cool” to “wait, what just happened?” in record time. Just a few years ago, most of us were asking “what is AI, really?” Now it’s writing novels, generating videos from text, and even passing medical exams. Tools like ChatGPT and Midjourney aren’t just impressive; they’re evolving faster than we can keep up. And that’s exactly what has people nervous. When something becomes this powerful, this quickly, it can start to feel like we’re losing the steering wheel.

It’s not just about being impressed; it’s about being prepared. With AI popping up in every industry, a lot of people are trying to figure out how to work with it instead of against it. That means learning when to lean on AI tools and when to draw the line. If you’re using AI at work, or even just testing the waters, it helps to have a clear strategy. In one of our earlier posts, we explored how to use AI cautiously without giving up too much control. Because let’s be real: if you’re not intentional, it’s easy to go from using AI to depending on it.

The point is, AI’s progress isn’t slowing down. But maybe, just maybe, we need to slow down our blind trust in it.

The Good Side: AI Changing Lives for the Better

For all the headlines about AI taking over, let’s not forget: it’s also doing a lot of good. In healthcare, AI is helping doctors catch diseases earlier, personalize treatment plans, and even discover new drugs faster than ever. In education, it’s making learning more accessible, from tutoring tools to language apps that adapt to each student’s pace. And for people with disabilities, AI-powered tech like real-time speech-to-text or visual recognition is opening up new levels of independence.

Even in everyday life, tools like smart assistants, AI-generated productivity hacks, and language models are helping people save time, create content, and solve problems faster. For creatives, AI isn’t just competition; it’s a powerful collaborator. Artists, writers, and musicians are using it to explore new ideas and break through creative blocks.

So no, it’s not all doom and gloom. AI can be a force for good when it’s used with the right intentions and boundaries. The trouble is, the line between helpful and harmful isn’t always clear. And in some cases… we’ve already seen it crossed.

Crossing the Line? Where AI Might’ve Gone Too Far

This is where things get a little uncomfortable. While AI has brought a lot of good, some uses genuinely make you stop and think: Maybe this has gone too far.

Let’s start with deepfakes: realistic but completely fake videos generated by AI. At first, they were used for harmless fun. But now? They’re being weaponized to spread misinformation, impersonate public figures, and even commit fraud. It’s not just creepy; it’s dangerous. The line between truth and fiction is starting to blur, and fast.

Then there’s mass surveillance. In some countries, AI-powered facial recognition is used to track citizens in real time. It’s sold as “security,” but when you zoom out, it starts to look more like privacy erosion on a massive scale. Who’s watching the watchers?

Even in the world of creativity (writing, art, music), AI is raising eyebrows. Sure, it can generate impressive content. But at what cost? Artists and writers are seeing their work scraped, mimicked, and replaced. It’s sparked a real debate about what creativity even means and whether machines can (or should) take over that space.

And let’s not ignore the scariest part: AI in warfare. Autonomous drones, predictive targeting, machines deciding life or death. It’s not science fiction anymore; it’s happening now, with very little global regulation.

All of this raises a hard question: has AI gone too far, or are we just not setting the right boundaries? The tech itself isn’t evil, but the way it’s being used (and abused) in some areas crosses more than a few ethical lines.

If we don’t start drawing limits soon, we might look back and realize we passed the tipping point without even noticing.

Ethical Dilemmas We Can’t Ignore

As AI gets smarter, the ethical problems get harder to ignore. Take accountability, for example. In 2018, it was reported that Amazon had scrapped an AI-powered recruitment tool after it was found to be biased against women. No one had intended to discriminate, but the algorithm learned it from historical hiring data. So when things go wrong, who’s actually responsible? The developers? The data? The AI?

Bias is everywhere in AI. Facial recognition systems have misidentified people of color at much higher rates, even leading to false arrests in the U.S. These aren’t just bugs; they’re life-altering mistakes caused by flawed training data.

Transparency is another big issue. Tools like GPT and DALL·E can generate shockingly realistic outputs, but even the creators don’t fully understand how these systems make certain decisions. That’s a serious concern when AI is used in healthcare, finance, or legal cases.

And then there’s consent. Many generative AI models were trained using publicly available images, writing, and voices without permission. That’s why artists and writers are pushing back, filing lawsuits against companies using their work to train AI tools that could eventually replace them.

So again, has AI gone too far, or are we just not ready to deal with the consequences of what we’ve created?

The Human Cost: Jobs, Identity, and Control

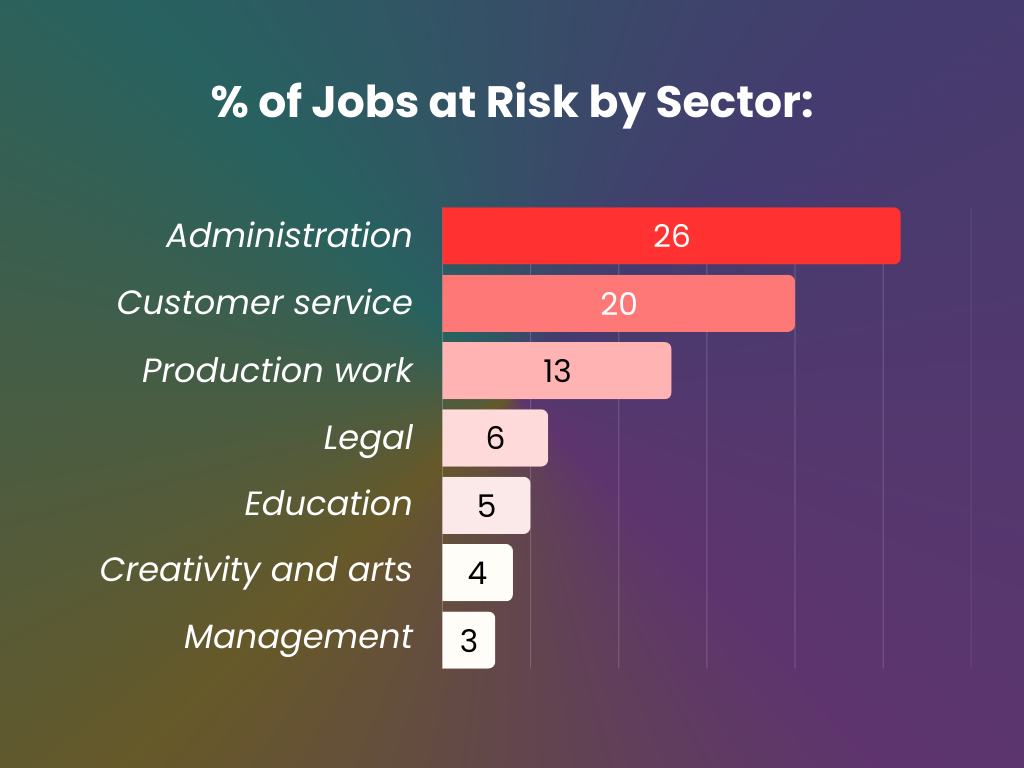

For all the talk about how AI boosts productivity, there’s a quieter, more personal cost that doesn’t always make headlines, and it’s starting to show. From artists and writers to customer service reps and coders, people are asking the same question: What happens to my job when AI can do it faster and cheaper?

In early 2023, it came to light that media company CNET had quietly been publishing AI-written articles. Several were found to contain serious factual errors, but the move was clear: automation wasn’t just coming for blue-collar jobs anymore. It was knocking on the doors of journalists, designers, and other creative workers. AI taking jobs isn’t a distant threat; it’s already here, just in subtle waves.

But beyond employment, there’s something even deeper: our sense of identity and control. If AI can create art, write stories, and make music, what does that mean for human creativity? And when companies rely more on algorithms to make decisions, what happens to human judgment?

If you’ve ever wondered how to avoid AI taking your job, it starts with learning how to work with it, not compete against it. But the bigger question remains: if we let AI keep replacing the human touch, are we slowly giving up more than just tasks?

Can We Put the Genie Back in the Bottle?

A few months ago, I saw a viral video of an AI-generated celebrity saying things they never said, and for a second, I believed it was real. That moment stuck with me. If something that fake can feel that real, how are we supposed to trust anything anymore?

That’s the problem. AI has moved so fast that governments and regulators are scrambling to catch up. Some governments, like the EU, are pushing forward with AI regulation laws aimed at transparency and safety. But globally? It’s a bit of a mess. There’s no unified playbook, no clear limits, and not nearly enough oversight.

The truth is, it’s really hard to control AI once it’s already out there, especially when the most powerful tools are in the hands of private companies or open-source communities.

We can’t shove the genie back in the bottle. But we can start drawing some lines before the magic turns into mayhem.

Can AI Be Powerful and Responsible?

Here’s the thing: AI itself isn’t evil. It’s just a tool. Like a hammer, it can build a home or break a window. The real question is, who’s holding it? And do they care about what happens next?

There’s a growing movement pushing for responsible AI development, where transparency, ethics, and human impact are baked into the process, not just added on later. Companies like Anthropic are experimenting with “constitutional” AI, in which a model is trained to follow a written set of principles. Others are calling for more open-source frameworks so the public can actually see how decisions are made. That kind of AI transparency could be a game-changer.

But tech alone won’t fix this. It’s going to take public pressure, ethical leadership, and a willingness to say “no” even when something looks impressive.

So, yes, AI can be powerful and responsible, but only if we stop treating it like a toy and start treating it like the world-shaping force it actually is.

Conclusion: So, Has AI Gone Too Far?

After looking at it from every angle (the speed, the benefits, the risks, and the ethical mess it’s dragging behind), the honest answer is: it depends. Has AI gone too far? In some ways, yes. When deepfakes are confusing reality, when jobs are quietly being replaced, and when decisions are being made by black-box systems no one can fully explain, it definitely feels like we’ve crossed a line.

But the truth is, it’s not just about AI. It’s about us. The tech didn’t evolve on its own. We built it, we released it, and now we’re learning, sometimes the hard way, how powerful it really is.

So maybe the better question is: Are we ready to take responsibility for it?

Because if we are, there’s still time to shape AI into something that works for us, not around us, or worse, without us.

Can AI really make decisions on its own?

Yes, some advanced AI systems can make decisions without human input, especially in areas like finance or security. The problem is, we don’t always know how they decide.

Is it still safe to use AI tools for everyday tasks?

For most things, like writing, organizing, or research, AI is still very useful. The key is to stay aware of what data you’re sharing and not rely on it blindly.

Why do some people trust AI more than humans?

Because AI can feel “neutral” or faster, people assume it’s smarter or less biased, but that’s not always true. AI reflects the data it’s trained on, and that can be flawed.

Are kids and teens being affected by AI too?

Definitely. From AI-generated content on social media to deepfake videos and personalized algorithms, younger users are interacting with AI constantly, often without realizing it.

Should I be worried about AI replacing my job?

Not if you learn how to work with it. Jobs are changing, not disappearing overnight. The real threat is not adapting, not the tech itself.
